Cassandra's Write Path: Behind the Scenes

Cassandra is an open-source, distributed NoSQL database in the wide-column (column family) category. It can handle massive volumes of reads and writes and scale to thousands of nodes.

Key characteristics of Cassandra:

1. Based on Amazon’s Dynamo and Google’s Bigtable.

2. Based on a peer-to-peer architecture. Every node can serve reads and writes, so there is no master node, no master-slave relationship, and no single point of failure.

3. Tunable consistency for both reads and writes.

4. Scales horizontally by partitioning all data stored in the system using consistent hashing.

5. Gossip protocol for inter-node communication.

6. Supports automatic partitioning and replication.

Coordinator: When a client sends a request to a Cassandra node, that node acts as the coordinator between the application (the Cassandra client) and the nodes that will handle the write operation. Any node in the cluster can take the role of coordinator.

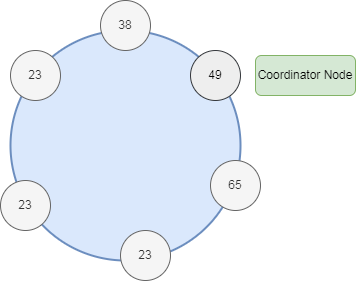

Partitioner: Each node in the Cassandra cluster (also called a ring) is assigned a range of tokens. Cassandra distributes data across the nodes using a consistent hashing algorithm. With virtual nodes (vnodes), each node owns many small token ranges instead of one large range, which helps keep the load evenly distributed when a node is added or removed.

The partitioner takes care of distributing data across the nodes in the cluster based on the partition key in the request. It applies a hash function to the partition key to get a token. Based on the token, the partitioner knows which node is going to handle the request.
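
The key-to-token-to-node lookup described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses MD5 from the standard library as a stand-in hash (Murmur3 is not in the standard library), and the `TokenRing` class, the node names, and the `vnodes_per_node` parameter are hypothetical names invented for this example, not Cassandra APIs.

```python
import bisect
import hashlib


def token_for(partition_key: str) -> int:
    """Hash a partition key to a 64-bit token (MD5 stand-in, not Murmur3)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class TokenRing:
    """Hypothetical ring: each node owns several small token ranges (vnodes)."""

    def __init__(self, nodes, vnodes_per_node=8):
        # Derive vnode tokens by hashing "node#index" (an assumption of this
        # sketch), then sort them so the ring can be searched with bisect.
        self._ring = sorted(
            (token_for(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes_per_node)
        )
        self._tokens = [token for token, _ in self._ring]

    def owner(self, partition_key: str) -> str:
        token = token_for(partition_key)
        # The owner is the first vnode whose token is >= the key's token,
        # wrapping around to the start of the ring if there is none.
        index = bisect.bisect_left(self._tokens, token) % len(self._tokens)
        return self._ring[index][1]


ring = TokenRing(["node-a", "node-b", "node-c"])
print(ring.owner("user:42"))  # always the same node for the same key
```

Because the lookup depends only on the key's hash and the sorted tokens, any node can compute the owner of a key without asking a central directory.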

Cassandra offers 3 types of partitioners.

1. Murmur3Partitioner

2. RandomPartitioner

3. ByteOrderedPartitioner

Murmur3Partitioner is the default partitioner in Cassandra clusters.

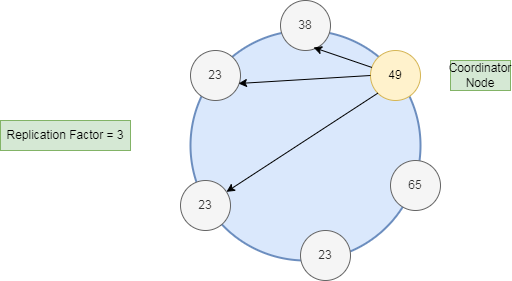

Replication Strategy: The Coordinator uses the Replication strategy to find replica nodes for a given request.

Two replication strategies are available:

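Simple Strategy: This is for single data center deployments. It places the first replica on the node chosen by the partitioner and the remaining replicas on the next nodes clockwise around the ring, without considering network topology. It is not recommended for production environments.

Network Topology Strategy: It also starts from the partitioner’s decision and places the remaining replicas clockwise, but it takes rack and data center configuration into account.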

Replication Factor: It specifies how many copies (replicas) of each row Cassandra keeps on different nodes. It is configured per keyspace. If RF = 3 is selected, each row is stored on 3 nodes in the cluster.

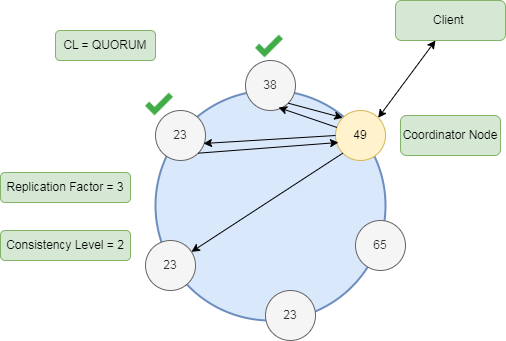
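
To make the clockwise placement concrete, here is a minimal sketch of how a Simple Strategy style lookup could pick replicas for a token. The ring, its token values, and the `replicas_for` function are hypothetical and chosen only for readability; rack and data center awareness is deliberately left out.

```python
import bisect

# Hypothetical ring with one token per node, sorted by token.
RING = [(0, "node-a"), (25, "node-b"), (50, "node-c"), (75, "node-d")]


def replicas_for(token, ring=RING, replication_factor=3):
    """Return the primary replica and the next nodes clockwise on the ring."""
    tokens = [t for t, _ in ring]
    # Primary replica: first node whose token is >= the key's token,
    # wrapping around to the start of the ring.
    start = bisect.bisect_left(tokens, token) % len(ring)
    count = min(replication_factor, len(ring))
    return [ring[(start + i) % len(ring)][1] for i in range(count)]


print(replicas_for(30))  # ['node-c', 'node-d', 'node-a']
print(replicas_for(80))  # ['node-a', 'node-b', 'node-c']
```

A token of 80 falls past the last node's token, so the lookup wraps around to `node-a`, which is what makes the structure a ring.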

Consistency Level for WRITE: It specifies the number of replica nodes that must acknowledge a write for it to be considered successful. Once the required number of replicas have acknowledged, the coordinator replies to the client.

Different types of Write consistency levels:

ANY, ONE, TWO, THREE, QUORUM, LOCAL_QUORUM, EACH_QUORUM, ALL
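
As a rough guide, each level maps to a number of required acknowledgments. The sketch below assumes a single data center, so LOCAL_QUORUM and EACH_QUORUM collapse to the same value as QUORUM. The `required_acks` helper is a hypothetical name for this example; a quorum is calculated as floor(RF / 2) + 1.

```python
def required_acks(level: str, replication_factor: int) -> int:
    """Acknowledgments needed for a write, assuming a single data center."""
    quorum = replication_factor // 2 + 1
    table = {
        "ANY": 1,  # a hint stored by the coordinator also counts
        "ONE": 1,
        "TWO": 2,
        "THREE": 3,
        "QUORUM": quorum,
        "LOCAL_QUORUM": quorum,  # same as QUORUM with only one data center
        "EACH_QUORUM": quorum,   # same as QUORUM with only one data center
        "ALL": replication_factor,
    }
    try:
        return table[level.upper()]
    except KeyError:
        raise ValueError(f"unknown consistency level: {level}") from None


print(required_acks("QUORUM", 3))  # 2
print(required_acks("ALL", 3))     # 3
print(required_acks("QUORUM", 5))  # 3
```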

WRITE request in Cassandra:

The client sends the write request to the Cassandra cluster using the Cassandra driver in the application. The coordinator receives the request and passes the partition key from the row to the partitioner's hash function to generate a token. Based on the token and the configured replication strategy, it determines the replica nodes (REPLICATION_FACTOR of them) that will write the data.

Let's say REPLICATION_FACTOR = 3 and WRITE_CONSISTENCY_LEVEL = 2.

Next, the coordinator checks with the failure detector whether all 3 replicas are available.

If all the replicas are available, the coordinator asynchronously forwards the WRITE request to all 3 replica nodes.

When the write request reaches a replica node, the following steps take place.

1. The data is first written to the commit log. This ensures durability: the write can be replayed and recovered even if the node fails.

2. Next, the data is written to the memtable, an in-memory structure that buffers writes. Eventually, memtables are flushed to disk and become immutable SSTables.
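
The two steps above can be sketched with a toy storage engine. Everything here is a simplified assumption for illustration: the `ReplicaStore` class, the JSON file formats, and the `flush_threshold` parameter are invented for this example. It also calls `fsync` on every write to keep the idea visible, whereas a real commit log may sync periodically or in batches depending on configuration.

```python
import json
import os
import tempfile


class ReplicaStore:
    """Toy replica: append to a commit log, buffer in a memtable, then flush."""

    def __init__(self, data_dir, flush_threshold=3):
        self.data_dir = data_dir
        self.commit_log_path = os.path.join(data_dir, "commitlog.jsonl")
        self.memtable = {}
        self.flush_threshold = flush_threshold
        self.sstable_count = 0

    def write(self, key, value):
        # Step 1: append the write to the commit log and force it to disk.
        with open(self.commit_log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # Step 2: apply the write to the in-memory memtable.
        self.memtable[key] = value
        if len(self.memtable) >= self.flush_threshold:
            self.flush()
        return True  # acknowledgment sent back to the coordinator

    def flush(self):
        # Write the memtable, sorted by key, to a new file that is never
        # modified afterwards (a stand-in for an immutable SSTable).
        self.sstable_count += 1
        path = os.path.join(self.data_dir, f"sstable-{self.sstable_count}.json")
        with open(path, "w", encoding="utf-8") as sstable:
            json.dump(dict(sorted(self.memtable.items())), sstable)
        self.memtable.clear()
        # The logged writes are now on disk in the SSTable, so this sketch
        # simply discards the commit log.
        os.remove(self.commit_log_path)
        return path


with tempfile.TemporaryDirectory() as tmp:
    store = ReplicaStore(tmp, flush_threshold=2)
    store.write("user:1", "alice")
    files_before_flush = sorted(os.listdir(tmp))
    store.write("user:2", "bob")  # reaches the threshold and triggers a flush
    files_after_flush = sorted(os.listdir(tmp))

print(files_before_flush)  # ['commitlog.jsonl']
print(files_after_flush)   # ['sstable-1.json']
```

Note that the acknowledgment is returned as soon as the commit log and memtable are updated; the flush to an SSTable happens later and is not part of the write's critical path.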

Once WRITE_CONSISTENCY_LEVEL replicas (in this case, 2) acknowledge the write, the coordinator replies to the client. A successful write means the data has been written to the commit log and the memtable on those replicas.

If WRITE_CONSISTENCY_LEVEL is set to 3 (instead of 2), the coordinator waits for the third replica as well and replies to the client only after all 3 replicas have acknowledged.
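
The "forward to all replicas, reply after enough acknowledgments" behavior can be sketched with `asyncio`. This is a simulation only: the replica names and delays are made up, `replica_write` just sleeps instead of doing any I/O, and `coordinate_write` is a hypothetical helper rather than anything from Cassandra or its drivers.

```python
import asyncio


async def replica_write(name: str, delay: float) -> str:
    """Pretend to apply a write on a replica, then acknowledge it."""
    await asyncio.sleep(delay)
    return name


async def coordinate_write(replica_delays: dict, consistency_level: int):
    # Forward the write to every replica at once, not just the first few.
    tasks = [
        asyncio.create_task(replica_write(name, delay))
        for name, delay in replica_delays.items()
    ]
    acked = []
    for next_done in asyncio.as_completed(tasks):
        acked.append(await next_done)
        if len(acked) >= consistency_level:
            break  # enough acknowledgments: the client can be answered now
    return acked, tasks


async def demo():
    delays = {"replica-1": 0.01, "replica-2": 0.05, "replica-3": 0.3}
    acked, tasks = await coordinate_write(delays, consistency_level=2)
    replied_with = list(acked)    # acknowledgments at the time of the reply
    await asyncio.gather(*tasks)  # the slow replica still finishes its write
    return replied_with


acked_at_reply = asyncio.run(demo())
print(acked_at_reply)  # ['replica-1', 'replica-2']
```

With `consistency_level=3` the loop would not break until the slowest replica had answered, which is why higher consistency levels generally increase write latency.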

Suppose only 2 of the 3 replicas are available. The request will still be forwarded to those 2 replicas, because the number of available replicas (2) is not less than WRITE_CONSISTENCY_LEVEL (2). The coordinator performs the same write process as explained above, with one extra step called hinted handoff: it stores the write locally as a hint for the unavailable replica and keeps it for 3 hours (default). When the replica node comes back up, the coordinator sends it the hint so that it can apply the write and become consistent with the other replicas. If the replica node does not come back online within that period, a repair (such as read repair) is needed to bring it up to date.
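
The availability check and hinted handoff can be modeled roughly as follows. This sketch follows the description above and treats the 3-hour window as a simple expiry on each hint; the real implementation differs in detail. The `Coordinator` class, its method names, and the in-memory dictionaries are all hypothetical.

```python
import time

HINT_WINDOW_SECONDS = 3 * 60 * 60  # the 3-hour default described above


class Coordinator:
    """Toy coordinator that stores hints for replicas that are down."""

    def __init__(self, replica_names, consistency_level):
        self.storage = {name: {} for name in replica_names}  # per-replica data
        self.alive = set(replica_names)
        self.consistency_level = consistency_level
        self.hints = []  # (target replica, key, value, created_at)

    def mark_down(self, name):
        self.alive.discard(name)

    def write(self, key, value, now=None):
        now = time.time() if now is None else now
        live = [name for name in self.storage if name in self.alive]
        if len(live) < self.consistency_level:
            raise RuntimeError("unavailable: not enough live replicas")
        for name in self.storage:
            if name in self.alive:
                self.storage[name][key] = value
            else:
                # Hinted handoff: keep the write locally for the down replica.
                self.hints.append((name, key, value, now))
        return len(live)  # number of acknowledgments

    def mark_up(self, name, now=None):
        now = time.time() if now is None else now
        self.alive.add(name)
        remaining = []
        for target, key, value, created_at in self.hints:
            if target != name:
                remaining.append((target, key, value, created_at))
            elif now - created_at <= HINT_WINDOW_SECONDS:
                self.storage[name][key] = value  # replay the hint
            # Expired hints are dropped; that replica then needs a repair.
        self.hints = remaining


coordinator = Coordinator(["r1", "r2", "r3"], consistency_level=2)
coordinator.mark_down("r3")
acks = coordinator.write("user:1", "alice", now=0)
coordinator.mark_up("r3", now=600)  # back after 10 minutes

print(acks)                       # 2
print(coordinator.storage["r3"])  # {'user:1': 'alice'}
```

If `r3` had returned after more than `HINT_WINDOW_SECONDS`, the hint would have been dropped and `r3` would stay empty until a repair ran. If two replicas had been down, the write would have been rejected up front, because only one live replica is fewer than the required two.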